Asynchronous URL Scanner in Laravel: 7 Powerful Insights for Faster Web Validation

Asynchronous URL Scanner in Laravel — learn how to validate thousands of URLs efficiently using asynchronous processing, chunking, and real-time reporting.

Introduction to Asynchronous URL Scanner in Laravel

Validating large batches of URLs is slow when you are dealing with thousands or even millions of links. An Asynchronous URL Scanner in Laravel addresses this by combining asynchronous processing, chunking, and lazy loading of the input file, which cuts validation time and keeps memory use predictable.

Whether you’re cleaning up outdated content, performing large-scale SEO audits, or verifying redirects during a site migration, asynchronous scanning lets you handle everything quickly without dragging down your servers.

Understanding the Need for High-Performance URL Validation

The Bottleneck of Traditional Synchronous URL Checks

Traditionally, URL validation happens in a simple loop: check one URL, wait for the response, then move on to the next. This works for small lists but scales poorly. The total run time is roughly the sum of every request's latency, and the process spends most of that time idle, waiting on the network.

Why Asynchronous Processing Changes Everything

Asynchronous execution allows multiple URL checks to run simultaneously. Instead of waiting for each request individually, you can handle hundreds or thousands at once. This reduces the total processing time dramatically and keeps the system responsive.
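To put rough numbers on this, the sketch below estimates wall-clock time for a scan run one URL at a time and with a fixed number of requests in flight. The function name, the 300 ms average latency, and the simplification that every request takes the same time are illustrative assumptions, not measurements from the scanner in this article.

```php
<?php

declare(strict_types=1);

/**
 * Back-of-the-envelope estimate: requests are processed in "rounds" of
 * $concurrency at a time, and each round lasts one average latency.
 */
function estimateScanSeconds(int $urlCount, float $avgLatencySeconds, int $concurrency = 1): float
{
    $concurrency = max(1, $concurrency);
    $rounds = (int) ceil($urlCount / $concurrency);

    return $rounds * $avgLatencySeconds;
}

// 10,000 URLs at roughly 300 ms each.
printf("One at a time: %.0f s\n", estimateScanSeconds(10000, 0.3));
printf("100 at a time: %.0f s\n", estimateScanSeconds(10000, 0.3, 100));
```

Under these assumptions the sequential run takes about 3000 seconds (50 minutes), while 100 concurrent requests bring it down to about 30 seconds. Real scans land somewhere in between, because slow hosts and rate limits stretch individual rounds.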

Breaking Down the Core Logic of the URL Scanner

The provided PHP/Laravel code snippet showcases a well-structured approach to scanning massive URL lists without overwhelming the server.

Loading URLs Efficiently From Excel Files

Using Spatie SimpleExcelReader, the script pulls data from a spreadsheet:

$urls = SimpleExcelReader::create($pathToFile)->getRows();

This enables users to import large datasets from CSV, XLSX, or other compatible formats.

How Chunking Large Data Sets Prevents Overload

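Instead of handing the whole list to a single scanner, the code splits it into chunks of 1000 rows:

    $urls->chunk(1000)

Because getRows() returns a LazyCollection, chunk() pulls rows from the file on demand rather than reading the entire spreadsheet into memory first. Each scanner then works on a bounded, predictable amount of data.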
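For readers who want to see the idea without Laravel, here is a minimal plain-PHP sketch of lazy chunking with a generator. It is not how LazyCollection is implemented; lazyChunks() is a hypothetical helper that only illustrates the same "read a little, yield a batch" pattern.

```php
<?php

declare(strict_types=1);

/**
 * Yields arrays of at most $size items, consuming the input only as
 * far as needed to fill the next chunk.
 *
 * @param iterable<mixed> $items
 * @return Generator<int, array<int, mixed>>
 */
function lazyChunks(iterable $items, int $size): Generator
{
    if ($size < 1) {
        throw new InvalidArgumentException('Chunk size must be at least 1.');
    }

    $chunk = [];
    foreach ($items as $item) {
        $chunk[] = $item;
        if (count($chunk) === $size) {
            yield $chunk;
            $chunk = [];
        }
    }

    if ($chunk !== []) {
        yield $chunk; // final chunk, possibly shorter than $size
    }
}

foreach (lazyChunks(['a', 'b', 'c', 'd', 'e'], 2) as $index => $chunk) {
    echo $index, ': ', implode(',', $chunk), PHP_EOL;
}
```

Only one chunk exists in memory at a time here, which is the property that makes chunked processing attractive for very large files.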

Using Async Promises for Parallel Scanning

Each chunk is wrapped in an async() call provided by Pokio (https://github.com/nunomaduro/pokio), a library for asynchronous programming in PHP:

    // $row is one chunk of up to 1000 spreadsheet rows.
    return async(function () use ($row) {
        $scanner = new Scanner($row->pluck('url')->toArray());

        return $scanner->getInvalidUrls();
    });

This lets several batches be scanned at the same time instead of one after another.

How the Scanner Class Works Internally

Identifying Invalid URLs at Scale

Inside the Scanner class, each URL is tested for correct formatting, availability, and response code. Any URL that fails one of these checks is collected as invalid and returned by getInvalidUrls().
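The article does not show the Scanner class itself, so the following is only a sketch of what such a check could look like, not the author's implementation. It assumes PHP 8+ with the curl extension, uses HEAD requests, and treats any 2xx or 3xx final response as valid. The function names, the timeouts, and that status-code rule are all assumptions made for illustration.

```php
<?php

declare(strict_types=1);

/** Hypothetical helper: true for a syntactically valid http(s) URL. */
function isWellFormedHttpUrl(string $url): bool
{
    if (filter_var($url, FILTER_VALIDATE_URL) === false) {
        return false;
    }
    $scheme = strtolower((string) parse_url($url, PHP_URL_SCHEME));

    return in_array($scheme, ['http', 'https'], true);
}

/**
 * Hypothetical stand-in for Scanner::getInvalidUrls().
 *
 * @param array<int, string> $urls
 * @return array<int, string> URLs that are malformed or unreachable
 */
function findInvalidUrls(array $urls, int $timeoutSeconds = 10): array
{
    $invalid = [];
    $handles = [];
    $multi = curl_multi_init();

    foreach ($urls as $url) {
        if (!isWellFormedHttpUrl($url)) {
            $invalid[] = $url; // fails the format check, no request needed
            continue;
        }
        $ch = curl_init($url);
        curl_setopt_array($ch, [
            CURLOPT_NOBODY => true,         // HEAD request; some servers reject these
            CURLOPT_RETURNTRANSFER => true,
            CURLOPT_FOLLOWLOCATION => true, // judge the final hop of a redirect chain
            CURLOPT_CONNECTTIMEOUT => 5,
            CURLOPT_TIMEOUT => $timeoutSeconds,
        ]);
        curl_multi_add_handle($multi, $ch);
        $handles[spl_object_id($ch)] = [$url, $ch];
    }

    // Drive all transfers concurrently and record which ones completed cleanly.
    $transferOk = [];
    do {
        $status = curl_multi_exec($multi, $running);
        while (($info = curl_multi_info_read($multi)) !== false) {
            $transferOk[spl_object_id($info['handle'])] = $info['result'] === CURLE_OK;
        }
        if ($running > 0) {
            curl_multi_select($multi, 1.0); // wait for socket activity
        }
    } while ($running > 0 && $status === CURLM_OK);

    foreach ($handles as $id => [$url, $ch]) {
        $code = curl_getinfo($ch, CURLINFO_RESPONSE_CODE);
        $ok = ($transferOk[$id] ?? false) && $code >= 200 && $code < 400;
        if (!$ok) {
            $invalid[] = $url;
        }
        curl_multi_remove_handle($multi, $ch);
    }

    return $invalid;
}

// Malformed or non-HTTP entries are rejected without any network traffic.
var_dump(findInvalidUrls(['not a url', 'ftp://example.com/file']));
```

A HEAD request keeps bandwidth low, but a production scanner may need to fall back to GET for servers that answer HEAD with 405.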

Real-Time Progress Metrics and Reporting

After each chunk finishes, the script prints a running summary:

- Total URLs processed
- Valid URLs
- Invalid URLs
- Percentage breakdown

This helps users monitor scans as they happen.

Performance Benchmarking With Laravel’s Benchmark Utility

How Benchmark::dd Helps Detect Slow Operations

Laravel's Benchmark::dd() wraps the entire async execution:

Benchmark::dd(fn () => $promises->each(fn ($promise) => await($promise)));

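This dumps the elapsed time in milliseconds and then stops the script (dd stands for "dump and die"), so it belongs at the very end of the command. The figure is useful for comparing chunk sizes or spotting slow operations.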
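If you need the timing without halting the script, Laravel also offers Benchmark::measure(), which returns the elapsed milliseconds instead of dumping them. The same idea can be sketched in plain PHP with hrtime(); measureMs() below is a hypothetical helper, and the usleep() call merely stands in for the real scan.

```php
<?php

declare(strict_types=1);

/** Runs $task once and returns the elapsed wall-clock time in milliseconds. */
function measureMs(callable $task): float
{
    $start = hrtime(true); // monotonic clock, in nanoseconds
    $task();

    return (hrtime(true) - $start) / 1000000;
}

$elapsed = measureMs(static function (): void {
    usleep(20000); // placeholder for awaiting all promises
});

printf("Scan took %.1f ms\n", $elapsed);
```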

The full example looks like this:

    use Illuminate\Support\Benchmark;
    use Spatie\SimpleExcel\SimpleExcelReader;

    // Runs inside an Artisan command, hence $this->info().
    $urls = SimpleExcelReader::create($pathToFile)->getRows();

    $resultsOk = 0;
    $resultsErr = 0;
    $totalRowsProcessed = 0;

    // $row is one chunk of up to 1000 spreadsheet rows.
    $promises = $urls->chunk(1000)->map(function ($row) use (&$resultsOk, &$resultsErr, &$totalRowsProcessed) {
        return async(function () use ($row) {
            $scanner = new Scanner($row->pluck('url')->toArray());

            return $scanner->getInvalidUrls();
        })->then(function ($invalidUrls) use (&$resultsOk, &$resultsErr, $row, &$totalRowsProcessed) {
            $invalidUrlsCount = count($invalidUrls);
            $resultsErr += $invalidUrlsCount;
            $resultsOk += $row->count() - $invalidUrlsCount;
            $totalRowsProcessed += $row->count();

            $this->info('');
            $this->info('========================================');
            $this->info(sprintf('Total URLs processed: %d', $totalRowsProcessed));
            $this->info(sprintf('Valid URLs: %d (%.1f%%)', $resultsOk, ($resultsOk / max($totalRowsProcessed, 1)) * 100));
            $this->info(sprintf('Invalid URLs: %d (%.1f%%)', $resultsErr, ($resultsErr / max($totalRowsProcessed, 1)) * 100));
            $this->info('========================================');
        });
    })->collect(); // map() on a LazyCollection is lazy; collect() creates every promise before any is awaited

    Benchmark::dd(fn () => $promises->each(fn ($promise) => await($promise)));

Benefits of Using an Asynchronous URL Scanner in Laravel

Massive Speed Improvements

By scanning URLs simultaneously, tasks that once took hours may take only minutes.

Reduced Server Load

Each scanner works on a fixed-size chunk, so memory use stays bounded, and the scan finishes sooner, which frees the server for other work.

Cleaner, More Maintainable Code

Promises separate the scanning step (the async() closure) from the reporting step (the then() callback), which keeps each piece small and easy to change.

Step-by-Step Flow of the Provided Code Snippet

1. Loading and Chunking the URLs

URLs are read and grouped into manageable blocks.

2. Dispatching Promises in Parallel

Each chunk is processed asynchronously.

3. Error Counting and Reporting

Valid and invalid URLs are tracked and displayed after each processed batch.

Real-World Use Cases of Asynchronous URL Scanners

Website Migration Link Validation

Ensure links remain functional across domains.

SEO Audits and Broken Link Checking

Perfect for agencies handling large websites.

Enterprise-Scale Crawling Workloads

Process millions of URLs without downtime.

Best Practices When Implementing Async URL Scanning

Managing Concurrency Limits

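Avoid running too many promises at once. Every concurrent chunk holds memory and open connections, and a burst of requests against a single host can trigger rate limiting, so cap the number of chunks in flight.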
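One way to enforce such a cap is a sliding window: start work until the limit is reached, then wait for the oldest task before starting the next. The sketch below is generic plain PHP. runWithLimit() and its two callables are hypothetical names, and the fake "tasks" in the demo only stand in for real promises.

```php
<?php

declare(strict_types=1);

/**
 * Processes $items with at most $limit tasks in flight.
 *
 * @param iterable<mixed> $items
 * @param callable $start  receives an item, starts the work, returns a handle
 * @param callable $finish receives a handle, blocks until done, returns the result
 * @return array<int, mixed> results in the order the items were given
 */
function runWithLimit(iterable $items, int $limit, callable $start, callable $finish): array
{
    $limit = max(1, $limit);
    $inFlight = [];
    $results = [];

    foreach ($items as $item) {
        if (count($inFlight) >= $limit) {
            // Window is full: wait for the oldest task before starting another.
            $results[] = $finish(array_shift($inFlight));
        }
        $inFlight[] = $start($item);
    }

    // Drain whatever is still running.
    foreach ($inFlight as $handle) {
        $results[] = $finish($handle);
    }

    return $results;
}

// Demo with fake tasks that track how many are open at once.
$open = 0;
$peak = 0;
$results = runWithLimit(
    [1, 2, 3, 4, 5],
    2,
    function (int $n) use (&$open, &$peak): int {
        $open++;
        $peak = max($peak, $open);
        return $n;
    },
    function (int $n) use (&$open): int {
        $open--;
        return $n * 10;
    }
);

printf("Results: %s, peak in flight: %d\n", implode(',', $results), $peak);
```

With the scanner from this article, $start could wrap the async() call for one chunk and $finish could call await() on the returned promise. That pairing is an assumption about how the pieces would fit together, not something the original code does.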

Handling Timeouts and Failures Gracefully

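Set connect and response timeouts so one slow host cannot stall a whole chunk, and retry transient failures a limited number of times before marking a URL as invalid.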
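Laravel ships a retry() helper for this. For illustration, the plain-PHP sketch below shows the underlying pattern with exponential backoff. retryWithBackoff() is a hypothetical name, and the attempt count and delays are arbitrary example values.

```php
<?php

declare(strict_types=1);

/**
 * Calls $attempt until it succeeds or $maxAttempts is reached.
 * The delay doubles after every failure: base, 2x base, 4x base, ...
 *
 * @param callable $attempt receives the attempt number (starting at 1)
 * @return mixed whatever $attempt returns on success
 */
function retryWithBackoff(callable $attempt, int $maxAttempts = 3, int $baseDelayMs = 200): mixed
{
    $maxAttempts = max(1, $maxAttempts);

    for ($try = 1; ; $try++) {
        try {
            return $attempt($try);
        } catch (Throwable $e) {
            if ($try >= $maxAttempts) {
                throw $e; // out of attempts: surface the last error
            }
            usleep($baseDelayMs * 1000 * 2 ** ($try - 1));
        }
    }
}

// Demo: a check that fails twice, then succeeds.
$calls = 0;
$result = retryWithBackoff(function () use (&$calls): string {
    if (++$calls < 3) {
        throw new RuntimeException('temporary network error');
    }
    return 'ok';
}, 3, 10);

printf("Result: %s after %d calls\n", $result, $calls);
```

Retrying only makes sense for transient problems such as timeouts or 5xx responses. A 404 will not fix itself, so a scanner would normally record it as invalid on the first attempt.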

Logging and Monitoring Strategies

Use Laravel logs, external monitoring tools, or cloud observability services.

Using “Asynchronous URL Scanner in Laravel” in a Live Project

Integrate the script into scheduled jobs, migrate data with confidence, and automate your link-checking workflows with ease.

Frequently Asked Questions

1. How does asynchronous URL scanning speed up validation?

It processes multiple URLs at the same time instead of waiting for each response separately.

2. Can I scan millions of URLs with this approach?

Yes—chunking and async promises make large workloads manageable.

3. Does asynchronous processing increase memory usage?

Not significantly, because chunks limit how many URLs load at once.

4. What if a URL takes too long to respond?

Use timeout settings inside the Scanner class to skip slow responses.

5. Can this work with APIs beyond simple URL status checks?

Absolutely—any network-based validation can benefit.

6. Where can I learn more about async PHP techniques?

Check out ReactPHP’s documentation (https://reactphp.org) or the Laravel Octane guides.

Conclusion

Building an Asynchronous URL Scanner in Laravel is one of the most efficient ways to process massive link datasets. With chunking, asynchronous promises, and benchmark reporting, you can create fast, clean, and scalable URL validation pipelines suitable for enterprise workloads.

Comments

Great Tools for Developers

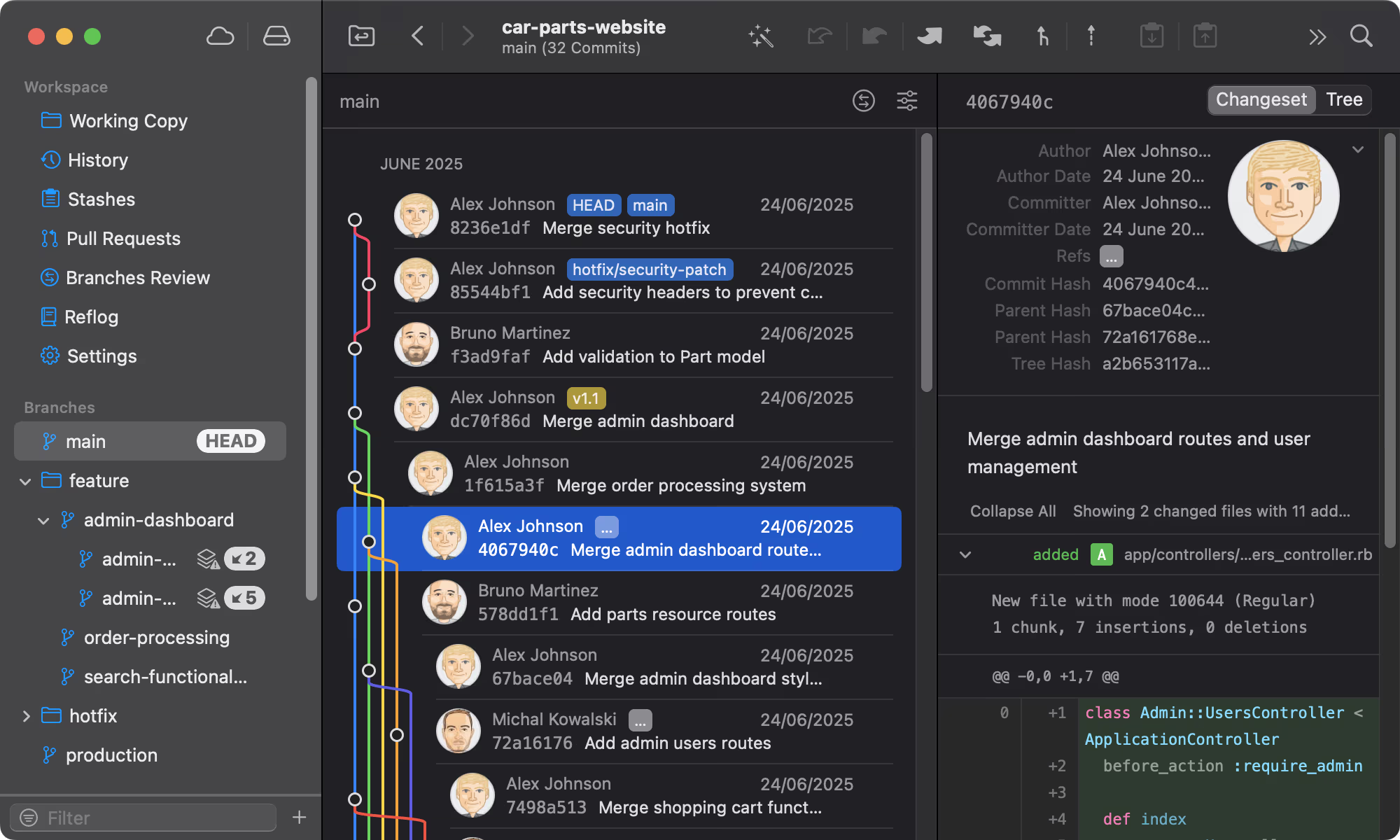

Git tower

A powerful Git client for Mac and Windows that simplifies version control.

Mailcoach's

Self-hosted email marketing platform for sending newsletters and automated emails.

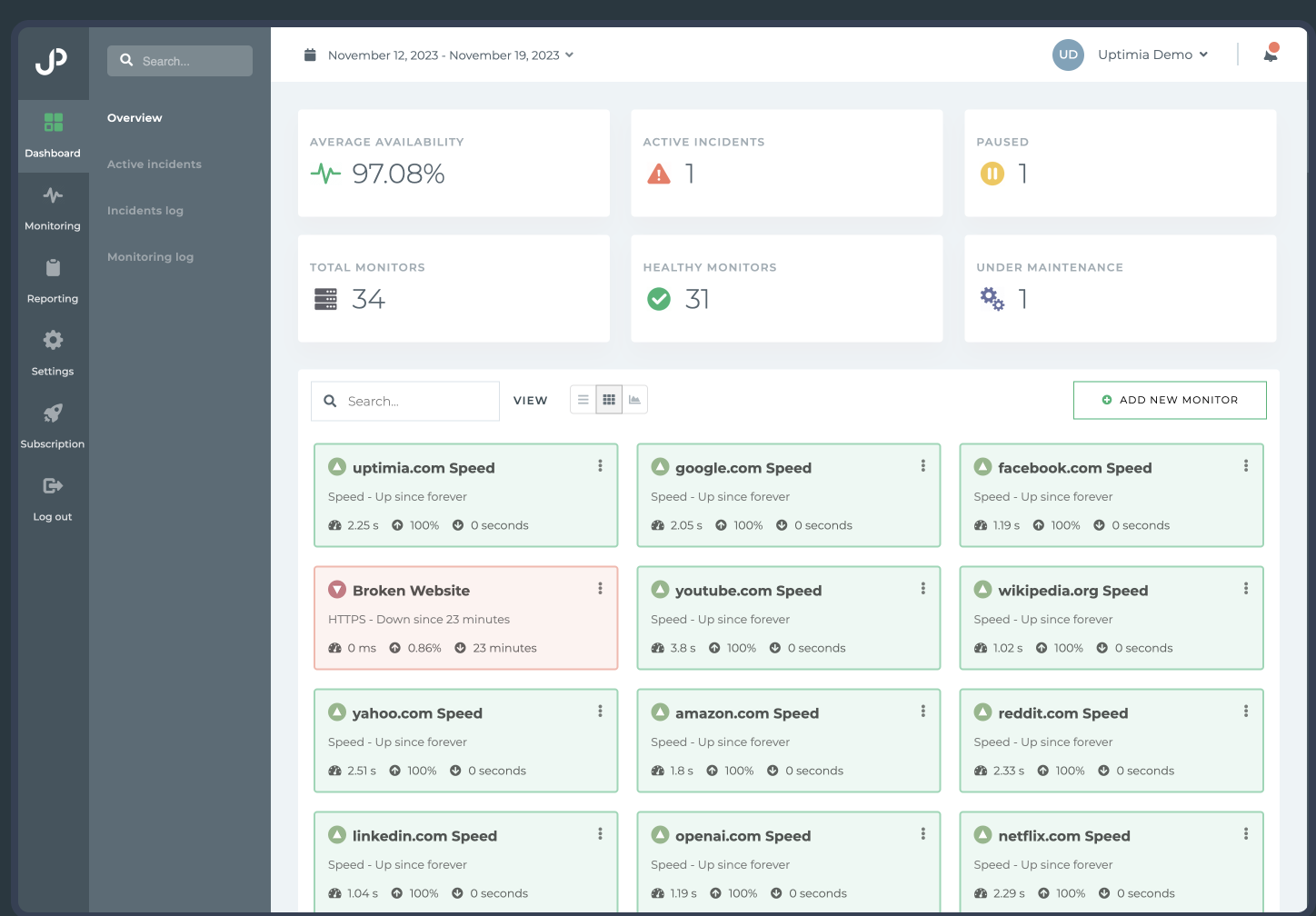

Uptimia

Website monitoring and performance testing tool to ensure your site is always up and running.

Please login to leave a comment.